KEY POINTS

- Microsoft Bing Chat is introducing DALL-E 3, an advanced version of OpenAI’s tool that converts text descriptions into detailed artwork. The upgraded version is rolling out to select users.

- DALL-E 3 significantly improves upon DALL-E 2 by accurately generating words, labels, and signs within images.

- OpenAI confirmed that DALL-E 3 will be accessible to paid customers of its AI platform in October. However, Bing Chat offers the same DALL-E 3 image creation capabilities for free.

Microsoft Bing Chat is rolling out DALL-E 3, an upgraded version of OpenAI’s text-to-image tool, to a “small group of users”, according to a source at the company.

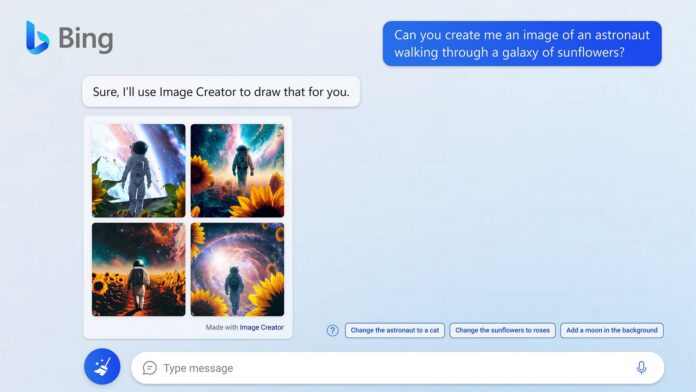

The ChatGPT-powered DALL-E 3 integration is live on one of our Microsoft accounts for Bing Chat, and it works as advertised: you can turn descriptions into artwork with more detail and legible text. Compared with DALL-E 2, the new DALL-E 3 is much better at creating high-quality artwork, largely because of how it handles text within images.

Microsoft-backed OpenAI’s DALL-E is like a magic box. You can tell the AI to draw any picture, and it will create a unique artwork. However, the previous-generation model was not good at generating words, labels and signs within images. The new model fixes these text generation problems and can now produce labels and signs more accurately.

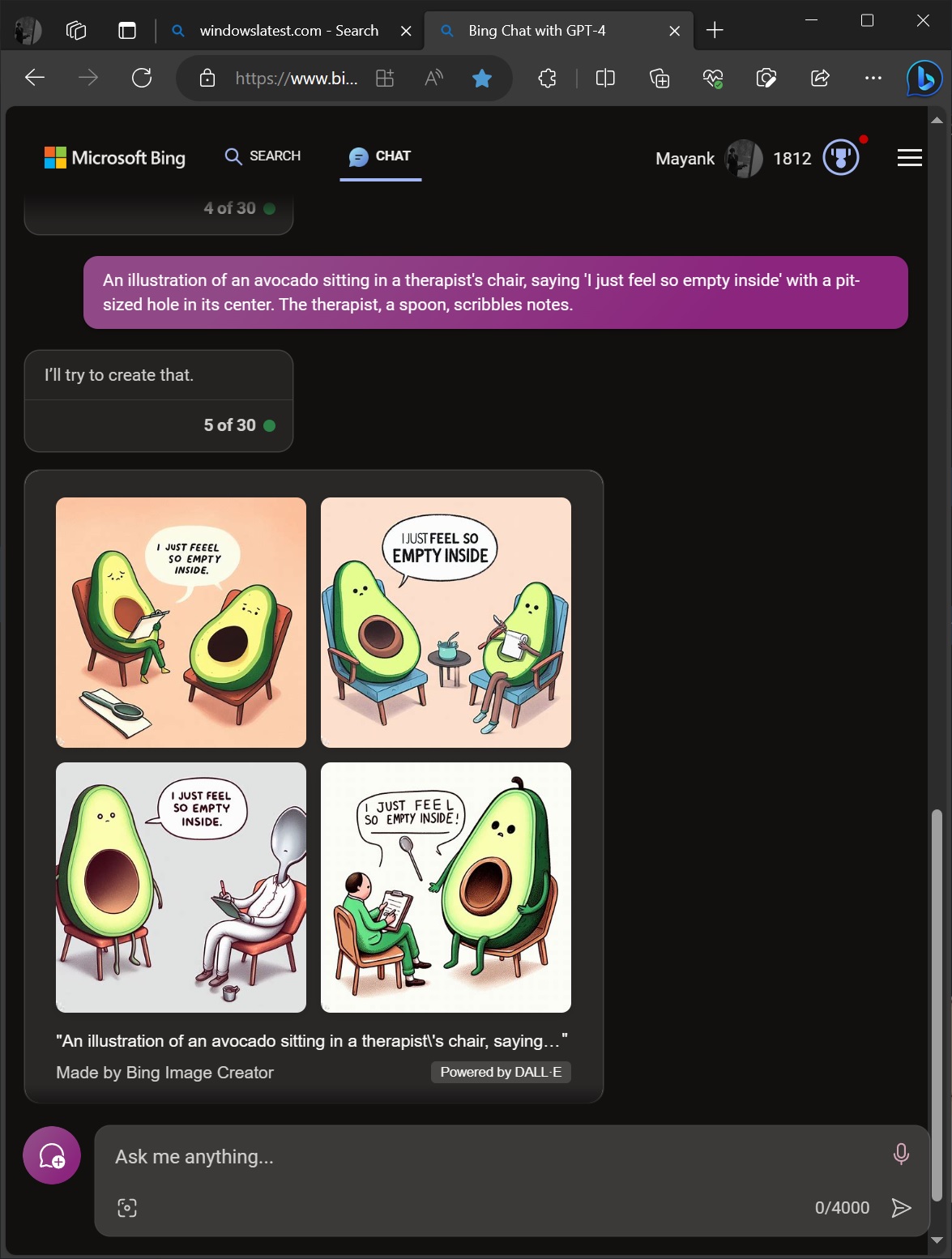

For example, if you ask Bing Chat AI to create “an illustration of an avocado sitting in a therapist’s chair, saying ‘I just feel so empty inside’ with a pit-sized hole in its centre. The therapist, a spoon, scribbles notes”, it can accurately produce the result. The text within the image, “I just feel so empty inside”, also appears correctly.

As you can see in the above screenshot, DALL-E 3 handles text within images significantly better than its predecessor. While DALL-E 2 produces artwork with random letters, the new AI model in Bing Chat rendered the image with the exact text described in the prompt.

The line “I just feel so empty inside” is rendered perfectly in the image.

We don’t know how many Bing Chat users have access to DALL-E 3, which isn’t even in ChatGPT yet, but a Microsoft source told us support is rolling out to a handful of accounts.

Yesterday, in a blog post announcing the next generation of text-to-image AI models, OpenAI confirmed that paid customers of its AI platform (ChatGPT Plus and Enterprise) can access DALL-E 3 in October. Once you have subscribed to ChatGPT Plus, you can directly type prompts in ChatGPT and create images.

However, if you can’t wait or do not want to pay for ChatGPT Plus, you can use Bing Chat’s DALL-E 3 image creator, which offers the same functionality at no cost.

If you have access to the early preview of DALL-E 3, you should be able to try the new image creator in Bing Chat in Microsoft Edge Canary, which was recently updated with “AI writing on the web” and Bing’s Continue on Phone features.

DALL-E 3 is significantly better than the previous models

The new text-to-image AI model offers several quality improvements over its predecessor, which struggled with labels, signs and requests for high-quality images.

The details are unavailable, but as with the previous models, DALL-E 3 in ChatGPT and Bing Chat is trained on millions of images from internet creators, photographers and artists, as well as stock images and more.

“Modern text-to-image systems have a tendency to ignore words or descriptions, forcing users to learn prompt engineering. DALL·E 3 represents a leap forward in our ability to generate images that exactly adhere to the text you provide,” OpenAI noted in the blog post.

The key difference between the new and old models is that DALL-E 3 pays closer attention to small details such as text and objects.